I’ve had cause to think about creativity and consciousness lately. Since February, I’ve been doing contract work as an LLM (large language model) AI trainer. Working with AI models on reasoning, creativity, accuracy, and logic has allowed me to see the beauty of consciousness in a new light and stirred up a snake-nest of questions about what makes art true art.

What is it about experience that enriches story?

How does memory play into the picture?

How does the chemical surge of dopamine, serotonin, epinephrine, and oxytocin influence the push to master a craft, communicate with another, help someone, be helped by someone, love, learn, and mature?

Of course, these aren’t new questions (or even close to all of them), though they might be new to me.

As a creator, and as a human with a strong goal-oriented drive, I strive to be the best writer I can be. I want to master my craft. I want to take great pride in what I start and in what I finish. And mastery is a lifelong pursuit. I will always have something new to learn, some different technique to try, a better way to put down what I want to say. For stories, for novels, for blogs even, I want to do the work using my own brain, my own thoughts, my own ideas. I find joy in that. But this doesn’t mean that I don’t look for inspiration outside of myself at times. After all, where do ideas come from? (That’s one of the most frequently asked questions a writer gets.)

The truth is, ideas come from anywhere and from everywhere. The trick is keeping ears and eyes and mind and heart open to hear, see, recognize, and love them when they show up.

As an artificial being, can an AI model do those things? Is an AI model actually intelligent?

Publishing house founder Jean-Cristophe Caurette was quoted by a friend of mine as saying, “Firstly, let’s be clear: ‘Artificial Intelligence’ has no more intelligence than a cactus. The term ‘assisted plagiarism’ is much closer to the truth.”

(Which makes me wonder how much intelligence a cactus has.) That, in turn, leads me to think about how we measure intelligence.

According to an article titled “Defining and Measuring Intelligence,” two scientists who sorted through and synthesized more than 70 definitions of intelligence arrived at a single succinct one: “Intelligence measures an agent’s ability to achieve goals in a wide range of environments” (Legg & Hutter, 2007).

So yeah, based upon that definition, an AI model can be intelligent. Or can it? How do we define goals? What do we mean by environment? What makes an agent an agent? How wide a range of environments are we talking about here?

Intelligence, in the natural world (now I’m thinking again of cacti), is often linked to adaptability. The Greek philosopher Heraclitus, among his other doctrines, posited the idea of “universal flux.” Everything changes. So, with that in mind, all things must change too. Or else.

Going further down that pathway of thought, I pause to acknowledge Darwinian theory as summed up in a 1963 speech by a Louisiana State University professor, who said, “According to Darwin’s Origin of Species, it is not the most intellectual of the species that survives; it is not the strongest that survives; but the species that survives is the one that is able best to adapt and adjust to the changing environment in which it finds itself.”

From that place, focusing on adaptability, I can note, and be astounded by, how humans have built cities in inhospitable places (Las Vegas and Dubai are two examples), have withstood invading pathogens and kept them from wiping us out completely through new understanding (such as washing hands to prevent the spread of disease and the development of vaccines), and are evolving in ways that defy our primal, animalistic, and biological urges (one example, which some see as adaptability harming our species, is women and men choosing not to have children for their own personal reasons).

Serial entrepreneur Tom Bilyeu says that humans are “the ultimate adaptation machine.” Like so many other natural things, if we don’t adapt, we die. How do AI models adapt? Can they? If the human prefrontal cortex doesn’t fully develop until around age twenty-five, does that mean AI models can grow up faster than we can? (I suppose that depends on how well they are trained.)

As AI models are taught by creators such as me and become more adept, will AI models shove creators out of their workplaces? Will that vibrant, flaming core within the human soul be blown out by some evil robotic overlord? Will those assisted plagiarists destroy humanity as we know it? Are all creators, human and otherwise, plagiarists assisted by all they’ve experienced, learned, and sucked in? Maybe so.

That said, plagiarism, as in stealing someone else’s ideas, words, or works and presenting them as one’s own, is something I am 100,000 percent against. Make your own art. Do the work. Cite your sources. Be proud of what you do. Pursue mastery. As a writer who would love to see myself and other creators able to live off our creations, I acknowledge the struggle people have with letting machines do the things that make us human. Especially the creative arts. Additionally, I recognize the ways in which AI has greatly increased the workload of educators, who now have to ensure (by running handed-in assignments through AI detectors and plagiarism checkers, by giving handwritten tests in class rather than letting students take assignments home, etc.) that students are actually learning, thinking, and producing their own work.

Is it learning if a student doesn’t do all the work on their own?

If I have to write a resume and I use an online template and examples of great resumes, commandeering words and framing them around my own experience, is that not doing the work myself?

What’s the difference between that and a student utilizing online AI resources?

(That would, I suppose, largely depend on what the output was and exactly how the student used the available AI resources. Obviously, there is no one-size-fits-all answer.)

The human brain, in its primal quest to stay alive, seeks out the laziest method and the path of least resistance in order to conserve energy for when it might be absolutely necessary. Later on. Because why waste a resource? Why burn that calorie if you might need it to run for your life? It’s safer to be sedentary. But we’ve learned to override our primal brain. Are we more human or less human if we do so?

Can an AI model be lazy? Can an AI model be truly creative? How does an AI model learn?

One of the other AI model trainers once mentioned in a conversation thread that some of these models are like babies. They’re not smart yet. They’re not grown up. They’re constantly learning. But how do AI models learn and grow? Can they? Is it programming or learning? What’s the difference? What is real and what is imagined?

I’m suddenly reminded of my three-and-a-half-year-old niece. One day, I was over playing with her and my five-year-old nephew. My brother had brought the kids their lunch and given each of us our own bowl of fruit.

At one point, my niece was being silly, stuffing strawberries in her mouth until her cheeks bulged out.

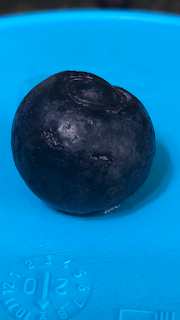

I said something like, “You eat all those strawberries, you’ll become a strawberry. You eat all your blueberries, you’ll become a blueberry.”

She processed my words and reacted in some charming three-year-old way which I can’t quite remember.

Then a bit later, she transferred her blueberries to my empty bowl.

“Those are your blueberries,” I said. “You have to eat them. I already ate all mine.”

“No, ju,” she said, pointing at me.

So then I pretended to eat one and handed her two. “One for me, two for you. Two for me, three for you.”

With the precious wicked gleam children get in their eyes, she scootched over and gathered all the remaining blueberries in her little hands, backed away from me (out of my arms’ reach), and crammed them in her mouth.

After she munched them down, she looked at me and, in her still barely-there English, said, “I’m a blueberry.”

“Come here, blueberry,” I said, reaching out. “Let me eat you! I’m going to eat you up.”

For a split second, she started to come my way. Then across her face ran a flicker of something like fear. That millisecond of absolute belief that she really was a blueberry, really and truly, and that I would actually eat her up. The thin veil between fantasy and reality. The barrier between the imagined and the actual. A foreshadowing of mortality.

Then it was gone, and she knew where we were and what she was. The game was fun again.

An AI model can’t feel, not like my niece, that something is so very real. It could have a conversation with me about blueberries, but would it ever (once correctly trained) be able to imagine being a blueberry so fully that it could believe I might actually eat it up? I don’t know. Technology advances. So do children. My niece won’t cross that fine line between real and not real forever. Not fully. Not like she did that afternoon when she transformed into a blueberry. As she grows up, she may be creative and tap into imagination, but that true belief may never come to her again. (And, standing in our own grown-up shoes, would any of us want it to come to us?)

The human brain and human consciousness are beautiful things. And we’ve found so many different ways to adapt to, change, and influence the world around us. AI is likely going to be a big part of our future. There are concerns. There are issues. There is fear.

But not everything is all bad.

The ways AI could be of great service to humankind or the world aren’t limited to one area. AI models could detect life-threatening health problems (including early detection of cancer), optimize renewable energy generation, tackle climate change, crack the protein code, protect biodiversity by enabling interference-free monitoring of species, and help people with disabilities communicate or interact with the world more easily (such as through voice-assisted AI devices for the visually impaired).

And while there are certainly problems with AI and the use of AI, we won’t stop being human as it becomes a more prevalent resource any more than we stopped being human when automobiles replaced horse-drawn carriages.

As I work, as I write, as I live, I think about the wonder we, as humans, can experience. That gift of consciousness that lets us create, grow, and feel. The incredible complexity of human individuality that lets technological advancement thrill one person and the chance of seeing a blue whale surfacing near San Diego thrill another.

When it comes to AI and the work I’ve been doing, I don’t have all, many, or even some of the answers, but I do know that there’s no one right way to be creative in the world, no one single unchangeable way of existing, no one single stream of consciousness that speaks for us all.

https://louis.pressbooks.pub/intropsychology/chapter/defining-measuring-intelligence/ - Defining and Measuring Intelligence

https://www.snopes.com/fact-check/darwin-strongest-species-survives-adaptable/ - Snopes fact check on the Darwin adaptability quote